This is kind of a silly and duplicative post, but I spent too much time searching for the right answer, so maybe this will help the right course of action bubble to the top faster in the future.

The Issue

I was trying to run a query on my local SQL install (whatever MAMP manages and provisions) using MySQL Workbench 6.3 for Mac but kept getting a timeout error.

![]()

The query itself wasn’t overly complex, but I was using aggregate functions, group by, and a join to consolidate a dataset. I’m working with distance education reporting data for all U.S. colleges and universities from 2012-2015, so this join involved a 7K row table and another with 25K rows, so not inconsequential but also not BIG data level.

SELECT STABBR as State, EFDELEV as Level , SUM(EFDETOT) as Total_Distance, SUM(EFDEEXC) as Exclusive_Distance, SUM(EFDESOM) as Some_Distance, SUM(EFDENON) as None_Distance FROM hd2012 LEFT JOIN ef2012a_dist_rv ON hd2012.UNITID = ef2012a_dist_rv.UNITID GROUP BY State, Level;

I did some initial googling on the error code, but it is a pretty general error code, so it was difficult to be sure whether this was a limitation of SQL or the Workbench DBMS. I read a few posts that suggested manipulating some of the .conf files for the underlying MySQL install, and I went too long down this road before trying something in Workbench itself.

It turns out there are timeout settings for the DBMS that you extend to make sure that it waits a sufficient amount of time for your query to return data. Thanks to this specific answer on StackOverflow, but the description of “how-to” it links to is no longer valid, hence this blog post.

The Fix

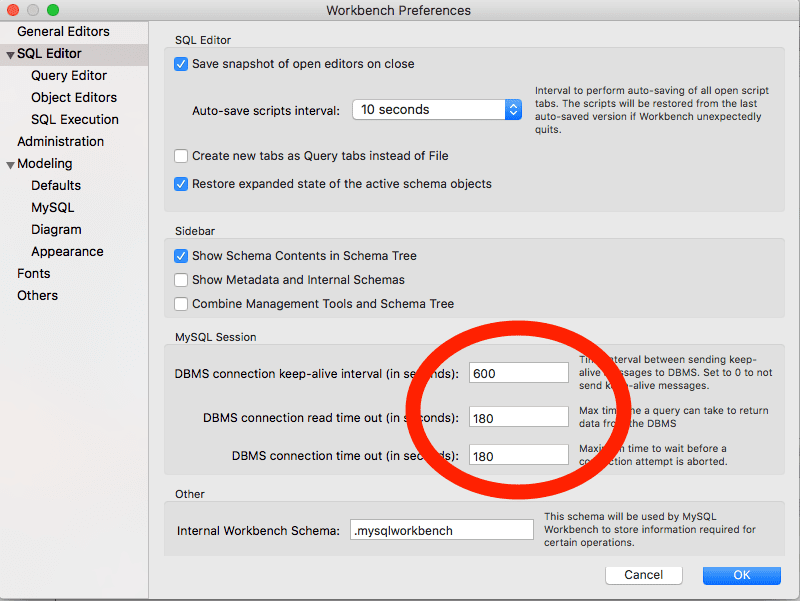

There is a quick setting in Preferences that helped me. As you might expect, the DBMS has settings to manage its connection to the SQL server. In my case, those were just too short for my long running queries.

I changed the 30 second defaults to 180, and returned the data I needed. However, I’d imagine that some things would call for a much higher timeout, especially if you wanted to do a lot of transactions.

Another Fix

As of 08/27/2018, I did some additional noodling around with the queries that produced this slow result and realized some simple indexing reduced the query time from ~50 seconds to .227 seconds. You can find a more detailed post about that here.

If you are looking for a way to stop the timeout error, now you have two options. However, now I realize that most of my issue had nothing to do with MySQL Workbench and everything to do with the way I constructed the underlying database : ) However, options are always good, so good luck!

Nice !

It’s not working in my case.

Not sure how big your data set is, how complex the query is, or how powerful your machine is, but have you tried increasing the timeout limit higher than 180 secs?

I did some more work on the project that created the slow queries and indexing was the ticket. Maybe that would help in your case: https://jeffreyeverhart.com/2018/08/27/using-the-right-tools-indexing-in-mysql/

its not working , i put 6000 seconds 🙁

Wow, does it run for the whole 6K seconds and then timeout? What type of dataset are you working with and what type of machine are you using? If it’s that big of a data set it might be time to look into come cloud options that would let you buy a massive machine for a few hours to crunch your numbers. Also possible to write a script of some kind to run the query.

Since the timeout is more a function of MySQL Workbench than the server, that should churn until the results finish. Let me know how it goes. -JE

I did some more work on the project that created the slow queries and indexing was the ticket. Maybe that would help in your case: https://jeffreyeverhart.com/2018/08/27/using-the-right-tools-indexing-in-mysql/

I got the same problem right now. I also worked on indexing and the same techniques i`ve read on stackoverflow but still its losing connection. its all suddenly became like that. even though we already tested it already on the production server working its working fine. then right now i am having a hard time to solve this

Oh wow, that’s a bummer. I’m not sure I have a good path forward for you, but what I might do is trying to run the query either through your programming language or directly through the command line. I think that would at least give you a little bit more information about where the issue is, whether it’s the management software or whether it’s the query.

I’m giving this a try now. What I’m trying to do is increase a VARCHAR size on a table with close to 20 million rows. It just takes time for the alter table function to copy that many rows. I may have to manually copy a new table to copy the data to it.

Oh, wow. That’s a big data set. Best of luck!

Thank you!

You’re welcome! Thanks for reading, JE

Took 3 1/2 minutes to run the query. And yes, I know this is not acceptable! But for the sake of testing, awareness, and future scalable calculations etc etc. It will assist to formulate an acceptable solution. Thx!